As the ML / AI field matures and sophisticated models get deployed across new (and critical) industries, new challenges come up. One of the most common is the perception that these models behave as black boxes, i.e. it is difficult to understand how they work and the reasoning behind their predictions. This is coupled with growing efforts to remove bias, e.g. detecting when a model relies on discriminatory features to make its predictions. Both of these lead to rising levels of mistrust in ML / AI systems. The field that tackles these problems is called explainable AI, or xAI for short. In this blog post I will walk you through the motivations behind the field.

Words of caution / Hype

Before getting started, I want to offer a few words of caution. While the "black-boxiness" of machine learning is not a new topic, and has been an issue since the first algorithms were created, the new wave of explainability methods is a relatively recent development. Because of this, many of the methods in the field have not been thoroughly tested in different environments, and academic work on the topic is still scarce. You should therefore be very careful when using these methods in critical production systems, and study them thoroughly rather than applying them blindly.

Unfortunately, xAI is also caught up in the hype and buzzword storm affecting the broader AI field. This hype can obscure both the value and the dangers of these methods, and it makes selecting the best method difficult.

What is xAI?

As a first step, let's define xAI. Although the field sometimes distinguishes between the two terms, for the sake of simplicity we will use the words interpretability and explainability interchangeably. The best definition I have found is this one:

Interpretability is the degree to which a human can understand the cause of a decision (Miller, 2017).

Two words in this definition deserve attention: human and decision. Together they show that the central idea of the field is to help humans understand the decisions made by machine learning systems.

Why do we need it?

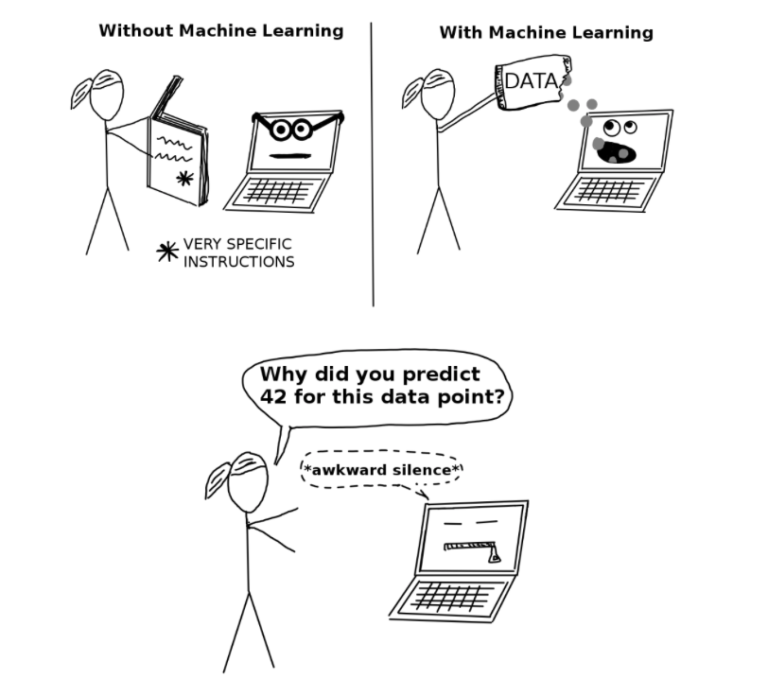

Before the advent of machine learning, computer programs made decisions by following strict, explicitly written rules. This made their results much easier to understand, since all you had to do was read the source code. Nowadays, however, even experienced data scientists can struggle to explain the rationale behind their models' predictions, and the process can seem like magic: provide the data, add a target for the prediction (decision), and get the result, with nothing in between to hint at how the decision was made. This issue is illustrated in the drawing below:

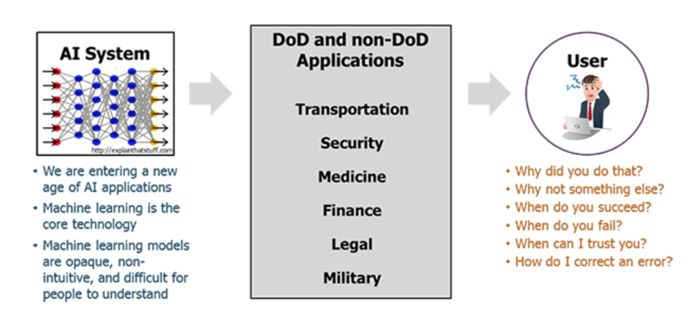

Another important motivation for the development of xAI is the growing maturity of production-grade ML systems in critical industries. Around 2009, most deployed machine learning systems lived inside the products of tech-first companies (e.g. Google, YouTube), where a false prediction would at worst show the user the wrong recommendation. Nowadays, these algorithms are deployed in sectors such as the military, healthcare and finance. Predictions in these new AI industries can have far-reaching and dramatic consequences for the lives of many people, so it is imperative that we know how these systems make their decisions.

DoD stands for Department of Defense.

Related to this topic are laws such as the GDPR and the so-called "right to explanation". A data scientist who deploys a machine learning model to production may be legally obliged to explain how the model reaches its decisions when those decisions can have a large impact on people.

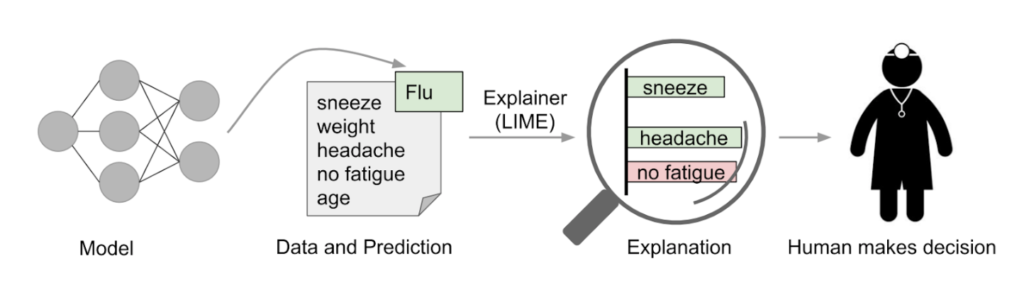

xAI can also benefit the end users of applications by increasing their trust in the system. Take an AI-powered healthcare application. A common scenario is that the technical team reports the model's performance to the domain experts (in this case, the application's users). The engineers report that the model achieves 95% accuracy in predicting whether a patient has a certain disease. Most of the time, healthcare practitioners are incredulous of such results and say that it is simply not possible to be that accurate. If we use a method such as Local Interpretable Model-agnostic Explanations (LIME) to explain why a certain patient is classified as not sick, the level of trust in the system should improve, because the doctors can see that the model follows logic very similar to their own when making a diagnosis. This scenario is illustrated below: even though the patient has some symptoms, such as sneezing and headache, the model classifies them as not sick because they exhibit no fatigue.

We have now gone through the fundamentals of xAI and the opportunities it offers. In upcoming posts, we will cover the methods and associated software tools available for xAI.