Twitter bots and fake social media accounts made headlines back in 2016, when they were widely reported to have been used to try to influence the US election. This year, a new group of presidential candidates is fighting for the seat in the White House, and they are also actively using Twitter as a communication channel. It made me wonder: what does it take to credibly fake someone’s online presence, and how far has technology advanced in recognizing the culprits since the last election? Would machine learning help in detecting fake text?

Text classifier: real Trump or Twitter bot?

To start with this fun project, I decided to first build a classifier to try distinguishing real Trump tweets from fakes. In the following paragraphs, you can see the steps I took from downloading the data to preprocessing to classification.

I’ve loaded a couple of thousand tweets from the following accounts: the actual Trump Twitter account (@realDonaldTrump), the parody Trump (@RealDonalDrumpf) and the best deep fake bot account that I could find (@DeepDrumpf).

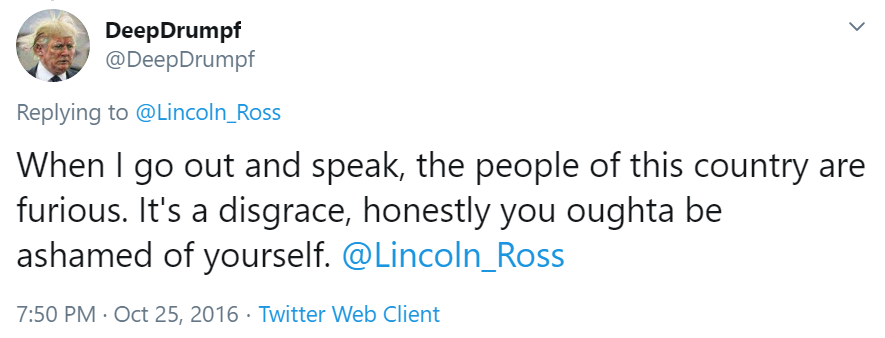

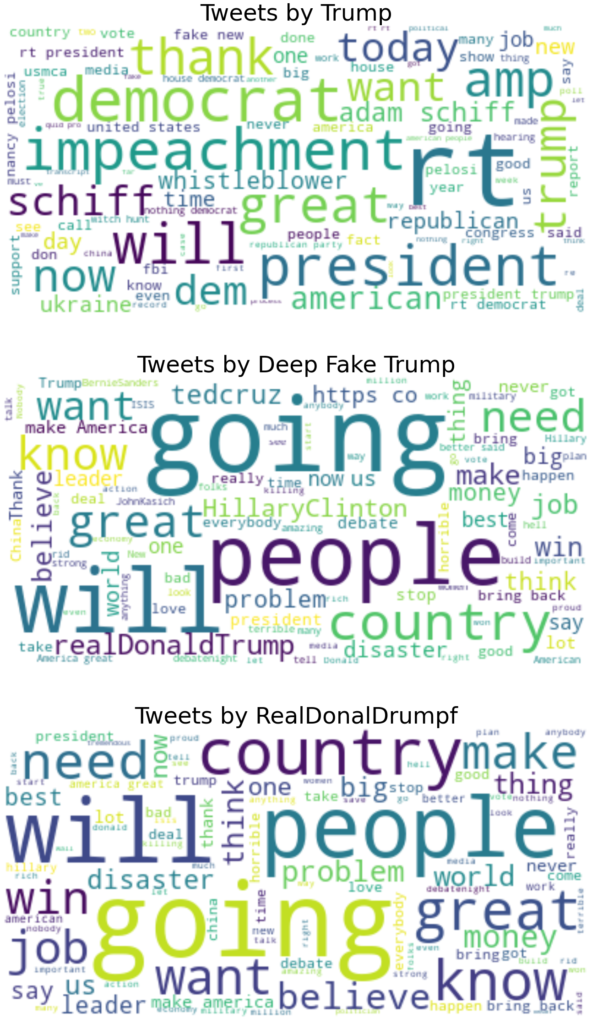

Next, I removed stopwords, extra punctuation, mentions and links. Then I visualised the collection of tweets for each account to reveal any oddities and the most commonly used words. I did not remove the retweet marker “rt”, as I found that it could be an indicator of tweeting style. The bots also might not be trained to retweet the same way as Donald Trump.
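A cleaning step like the one described above could be sketched as follows. This is a minimal illustration, not the exact code used for this post: the stopword list is a tiny made-up stand-in for a full one, the helper name `clean_tweet` is hypothetical, and the sample tweet is invented.

```python
import re
import string

# Tiny illustrative stopword list (an assumption). A real run would use a fuller
# list, e.g. the one shipped with an NLP library. Note that "rt" is NOT in it,
# so the retweet marker survives the cleaning.
STOPWORDS = {"a", "an", "and", "the", "to", "of", "in", "is", "it", "for", "on", "we", "will"}

URL_RE = re.compile(r"https?://\S+|www\.\S+")
MENTION_RE = re.compile(r"@\w+")
# Remove ASCII punctuation plus a few typographic characters common in tweets.
PUNCT_TABLE = str.maketrans("", "", string.punctuation + "“”‘’…")


def clean_tweet(text: str) -> list[str]:
    """Lowercase a tweet and drop links, mentions, punctuation and stopwords."""
    text = URL_RE.sub(" ", text.lower())
    text = MENTION_RE.sub(" ", text)
    text = text.translate(PUNCT_TABLE)
    return [word for word in text.split() if word not in STOPWORDS]


# Invented sample tweet, for illustration only.
sample = "RT @someone: We will MAKE America great again! https://example.com/x"
print(clean_tweet(sample))  # ['rt', 'make', 'america', 'great', 'again']
```

Links and mentions are stripped before punctuation on purpose. Otherwise the `@` and `://` would be removed first and the patterns would no longer match.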

The difference between the real Trump’s vocabulary and that of the fake accounts is quite apparent. In fact, I looked at the top 100 words used by each of the 3 accounts and found nothing in common between the real Trump and the parody and deep fake Trump accounts. However, the fakes themselves share a great deal of vocabulary. In particular (and not very surprisingly), at the top of their lists we see GREAT, COUNTRY, MAKE, AMERICA.
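The vocabulary comparison can be expressed as a set intersection over each account’s most frequent words. The sketch below assumes the tweets are already cleaned and tokenized. The function name `top_words` and the toy token lists are made up for illustration and are not the actual data.

```python
from collections import Counter


def top_words(token_lists, n=100):
    """Return the set of the n most frequent tokens across tokenized tweets."""
    counts = Counter(token for tokens in token_lists for token in tokens)
    return {word for word, _ in counts.most_common(n)}


# Toy, invented token lists standing in for the three accounts.
real = [["rt", "border", "wall"], ["border", "news"]]
parody = [["great", "america"], ["make", "america", "great"]]
bot = [["make", "america", "great"], ["great", "country"]]

# Words the two fake accounts share, and words the real account shares with either.
shared_by_fakes = top_words(parody) & top_words(bot)
shared_with_real = top_words(real) & (top_words(parody) | top_words(bot))

print(sorted(shared_by_fakes))   # ['america', 'great', 'make']
print(sorted(shared_with_real))  # []
```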

It is noteworthy that the Deep Fake Trump bot was discontinued in 2017. Therefore, its tweets might capture the style, but not the current vocabulary, of Mr Trump.

Tweet text vectorization and classification

Before proceeding to classification, I tokenized and lemmatized the tweets using spaCy. In practical terms, this means splitting tweets into lists of word strings and normalizing them by reducing each inflected word to its common base form. So, for example, “making” and “made” become “make” and are analysed as one unit of vocabulary. Subsequently, I vectorized the resulting sets of tokens using the Bag of Words (CountVectorizer in sklearn) and TF-IDF (TfidfVectorizer in sklearn) methods. For the classifier, I chose LinearSVC, which tends to work well on NLP classification problems. High-dimensional text data and support vectors are a match made in heaven.
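To make the vectorize-then-classify step concrete, here is a rough sketch that pairs each vectorizer with LinearSVC in a scikit-learn pipeline. It is an illustration under assumptions, not the code behind the results below: the texts and labels are invented, the texts are assumed to be already cleaned and lemmatized (the spaCy step is left out), and `build_classifier` is a hypothetical helper name.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC

# Invented, already-lemmatized example texts (assumption).
# Label 1 = real account, label 0 = fake account.
texts = [
    "rt border wall news today",
    "rt news conference border",
    "meeting today news border",
    "make america great country",
    "great country make great",
    "america great make win",
]
labels = [1, 1, 1, 0, 0, 0]


def build_classifier(vectorizer):
    """Chain a text vectorizer with a linear support vector classifier."""
    return make_pipeline(vectorizer, LinearSVC())


# Fit one model per vectorization method and classify an unseen text.
for name, vectorizer in [("Bag of Words", CountVectorizer()),
                         ("TF-IDF", TfidfVectorizer())]:
    model = build_classifier(vectorizer)
    model.fit(texts, labels)
    print(name, model.predict(["make america great"]))
```

On real data, the tweets would first be split into training and test sets (for example with `train_test_split` from sklearn) so that accuracy is measured on tweets the model has not seen.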

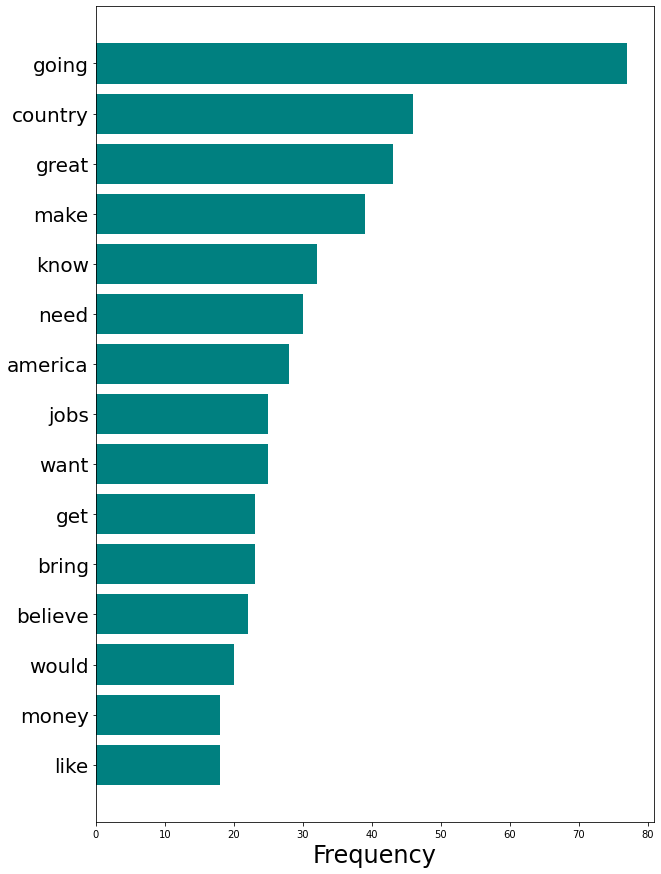

For the two vectorization methods, I got the following results using LinearSVC (Chart 2):

The accuracy of predictions of whether the tweets contain real or fake text is high in all 4 cases, that is, for both vectorization methods applied to each of the two comparisons (real vs. parody and real vs. bot). TF-IDF gave slightly better results than Bag of Words vectorization. All in all, it is reassuring that the algorithm was better at distinguishing between the real account and the bot account than between the actual US president’s tweets and the human-led parody account. This goes to show that algorithmic text generation has not yet been perfected. It is not so easy to fool either machines or humans when it comes to assessing the authenticity of public figures’ written communication online.

Conclusion & next steps

Machine learning is tremendously helpful in classification tasks. However, when it comes to language, it seems that conducting linguistic and social media pattern analysis yields more comprehensive results than throwing a black-box solution at the problem. In my process, the differences between the accounts could already have been spotted when analyzing the vocabulary. Others have also looked at alternative ways to spot a bot, such as its activity patterns. Bots tend to tweet too often and at very specific times (like every full hour), while humans tweet around 10-15 times a day at random times.
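The activity-based signals mentioned above are easy to compute from tweet timestamps. The following is a rough sketch of two such measures, assuming each tweet comes with a `datetime`. The function names, the one-minute tolerance and the sample timestamps are all made up for illustration, and any threshold for calling an account a bot would have to be tuned on real data.

```python
from datetime import datetime


def on_the_hour_share(timestamps, tolerance_minutes=1):
    """Fraction of tweets posted within tolerance_minutes after a full hour."""
    if not timestamps:
        return 0.0
    hits = sum(1 for ts in timestamps if ts.minute < tolerance_minutes)
    return hits / len(timestamps)


def tweets_per_day(timestamps):
    """Average number of tweets per calendar day on which the account tweeted."""
    if not timestamps:
        return 0.0
    active_days = {ts.date() for ts in timestamps}
    return len(timestamps) / len(active_days)


# Invented timestamps: one account posting every full hour, one posting irregularly.
bot_like = [datetime(2020, 1, 1, hour, 0) for hour in range(24)]
human_like = [
    datetime(2020, 1, 1, 9, 17),
    datetime(2020, 1, 1, 13, 42),
    datetime(2020, 1, 2, 22, 5),
]

print(on_the_hour_share(bot_like), tweets_per_day(bot_like))      # 1.0 24.0
print(on_the_hour_share(human_like), tweets_per_day(human_like))  # 0.0 1.5
```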

As the next step, I would like to employ more advanced analysis to decode what it means to tweet like Donald Trump.

Stay tuned!